Why you aren't feeling the AGI

While AGI creeps up on us, there will not be another "ChatGPT moment."


It’s been just over a month since OpenAI released GPT5. By all accounts, including comments from Sam Altman, the release was botched. Memories of that turmoil are now starting to fade, but one of the issues was widespread disappointment: people had hoped the jump from GPT4 to GPT5 would feel as significant as the jump from GPT3 to GPT4. What many were hoping for was rapid progress toward a system whose intelligence rivals that of humans, or “AGI.” Given the jump in capabilities of a next-gen GPT system, people thought AGI should at least feel noticeably closer. It didn’t. In this post I’ll explain why GPT5 really did represent as significant a jump as people were hoping for, and at the same time why it didn’t feel that way. In fact, it’s unlikely we’ll ever again feel the same kind of seismic shift that we felt when ChatGPT first came out.

OpenAI has many more competitors now than it did when ChatGPT was first released. From a purely consumer-oriented point of view, that competition is good: it keeps the pressure on everyone to develop technologies that we’ll all find useful (as long as competition doesn’t push companies to sacrifice safety considerations). However, to stay competitive, every model developer is forced to release its products as soon as it can claim they are at least incrementally better than its rivals’. OpenAI, for example, can’t wait two years between model releases. In that intervening time Google, Anthropic, X, Meta, Mistral, and several Chinese companies would release models superior to OpenAI’s last release, and consumers would stop using ChatGPT. As big as OpenAI has become, it still can’t afford to lose that many customers.

The result is a series of incremental releases. GPT4 was released March 14th, 2023, and GPT5 on August 7th, 2025. In the interim, to stay competitive with its rivals, OpenAI released GPT 4.1, 4o, 4.5, and its “o” reasoning series (o1, o3, o4-mini, etc.), all of which were stepping stones on the way to GPT5. Each of those releases was interleaved with releases from competitors: multiple Google Gemini models, Anthropic Claude models, DeepSeek models, and so on.

It seems unlikely that this dynamic will change. It takes 1-2 years to develop a truly next-gen model, yet to stay competitive, companies can’t wait that long to release something. So we get a series of partial releases, each incrementally better than the previous one, and when the next-gen model finally does come out, it just seems like another incremental improvement.

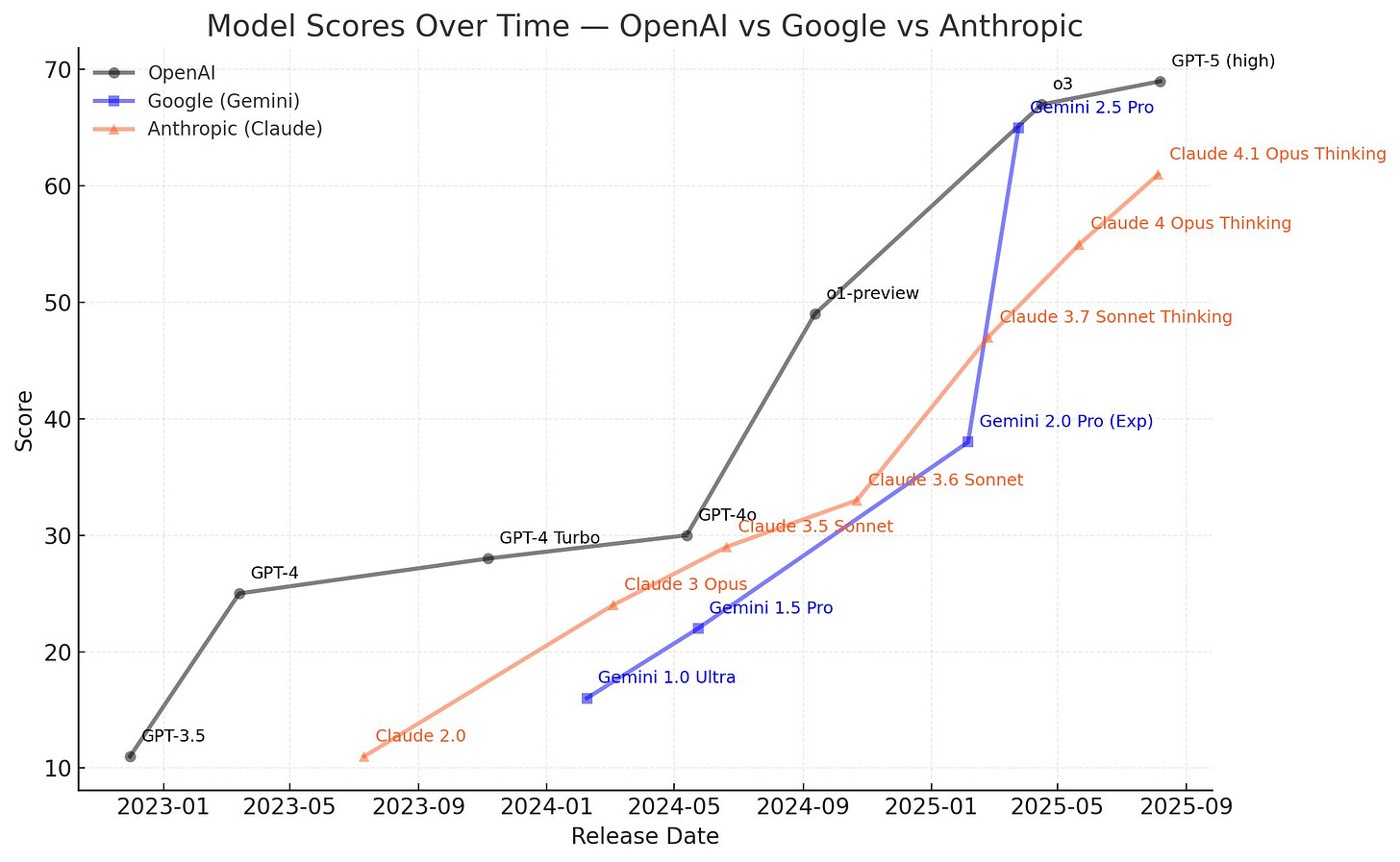

The following chart on LLM ability tells the whole story:

Notice GPT4 all the way down on the bottom left, and GPT5 on the top right. That’s a HUGE improvement, but the jump from o3, released just a few months before, to GPT5 is relatively small. When you look at all the competitors’ models, it’s easy to see why OpenAI couldn’t just wait two years to release GPT5 after they’d released GPT4.

There is another reason GPT5 disappointed many people. When talking to it through the chat app, conversations just didn’t seem any more “intelligent.” (Putting aside the uproar over the “personality” shift from GPT 4o to GPT5.) That’s because using AI for chat is already quite amazing. It’s worth continually reminding ourselves that the things you can ask it about, or ask it to do, would have seemed like pure magic just 3 years ago. It’s far from perfect, but imagine a scale of LLM chat quality that marks where things stood before ChatGPT, where they stand now, and where we might hope they’ll be in, say, 3 more years: I believe we’re already 90% of the way there. If you think of “chat vibe” as a benchmark, it’s becoming saturated. Shortly after GPT5 was released, Sam Altman said this himself in an interview:

> “The models have already saturated the chat use case.” (Sam Altman)

That doesn’t mean the models won’t get better. It just means there’s only room now for incremental improvements where each one won’t be so noticeable. Only when you look back over several such improvements will you see a difference.

The disappointment over GPT5 spurred several articles questioning whether AI model progress has plateaued. The one that got sent to me most often was published in the New Yorker under the title “What if A.I. doesn’t get much better than this?” Its subtitle was “GPT-5, a new release from OpenAI, is the latest product to suggest that progress on large language models has stalled.” Complete rubbish. When you track AI progress across all models, GPT5 falls along the same upward curve that progress has followed for several years.

There is some validity to the New Yorker’s critiques. Back in 2022 there was a perception that researchers thought all we needed to do to get to AGI was take GPT3 (the state-of-the-art model at the time) and simply scale up compute and data. However, model developers never seriously claimed this. It’s more accurate to say that the claim was that we’d reach AGI through scaling together with periodic algorithmic and hardware improvements. Those predictions do seem to have panned out: scaling plus algorithmic and hardware improvements is what has put AI capabilities on a fairly smooth upward curve. There are no guarantees that AI development won’t stall out at some point, but so far it hasn’t.

All of this presents a conundrum. If AI keeps improving at a steady pace, yet most people aren’t feeling those improvements, then who is? The answer is anyone who works in a domain where those improvements really matter. There are now countless business applications. For example, I was recently talking to someone who works in medical records and billing and discovered that ChatGPT can now do, in a few minutes, the more mundane parts of their job that used to take hours. Many areas of science and engineering benefit immediately from more advanced intelligence. And I think everyone has heard that software engineering is being completely transformed by AI tools.

With that said, I suspect there are many more people in the world for whom advances in intelligence are not relevant to their daily lives. Those people won’t directly feel the benefits of new models, which feeds a general perception that AI is all hype and that the money spent on it is inflating a tech bubble of unprecedented proportions. The truth is that we can’t predict the future. It’s certainly possible that we are in a bubble, and that it will burst any day now. However, the primary evidence for that would be ever-increasing spending on AI systems whose capabilities are not improving proportionally. For now, at least, that doesn’t seem to be happening.