Welcome to my new blog! As a professor of Computer Science, Data Science, and Mathematics, I’ve talked to thousands of students, colleagues, and professionals. This blog is where I’ll share insights from the many conversations I’ve had about the biggest questions surrounding AI: Is it coming for your job? Is it harming education — or the environment? Along the way I’ll include periodic technical explainers for non-technical readers, because I strongly believe you can’t have serious conversations about AI without first understanding the basics. I’ll also discuss the biggest AI news developments as they happen, to help you stay informed of the most current issues we’re all facing.

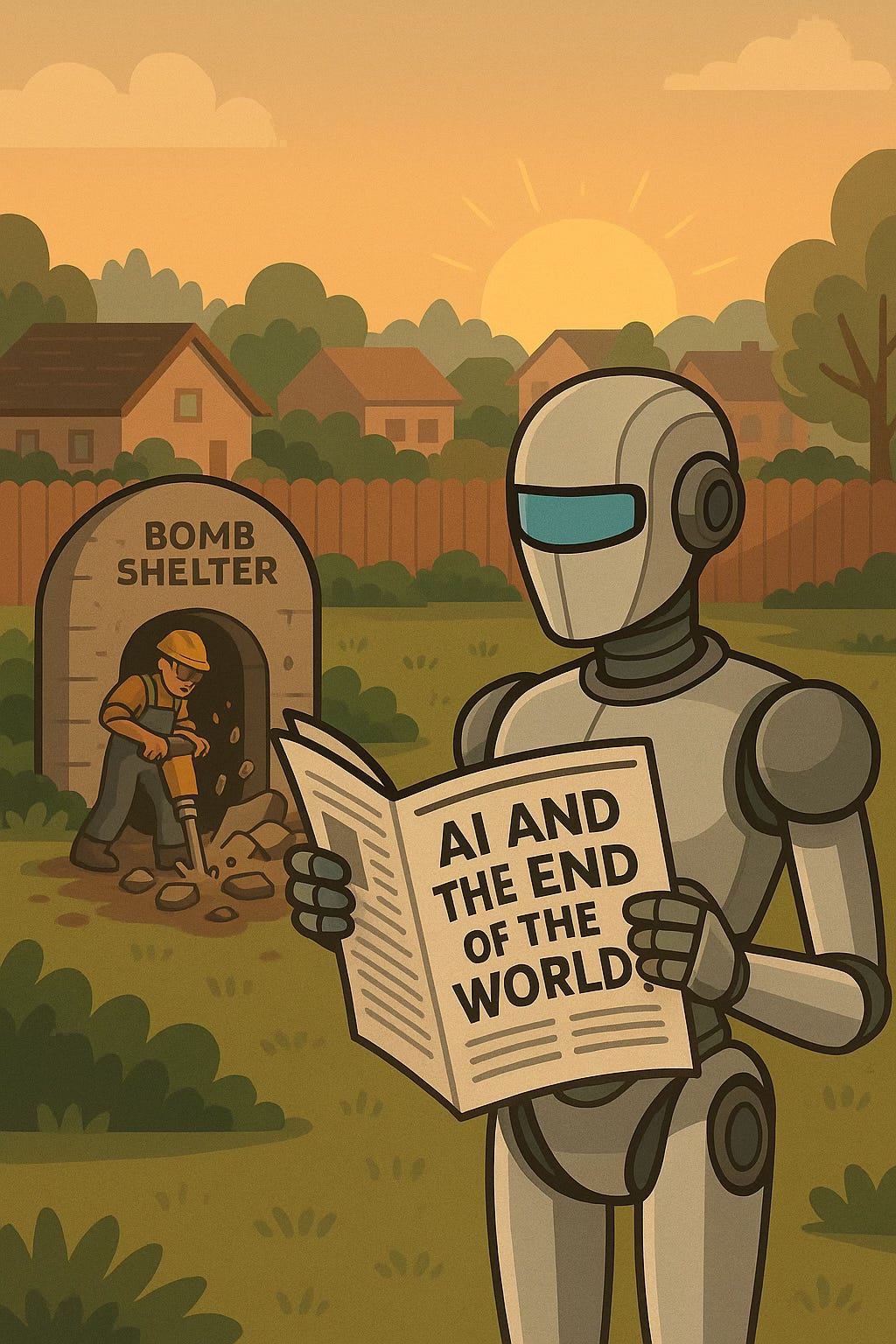

In this inaugural post, I’ll start with the biggest question: “Is AI going to kill us?” That’s what’s often called “existential risk” or, in more technical circles, p(doom), short for “probability of doom.” People who think that probability is high are often referred to as “Doomers.”

In this post I’ll lay out how I believe people should think about p(doom). My goal here is to help you understand both sides of the debate, so if you’re panicking you might sleep a little easier, and if you’re sleeping you might wake up and pay attention a little more.

The truth is, AI isn’t going away. Over time it is only going to get more capable. And with those increased capabilities will come increased risks, until at some point those risks include the potential to trigger any of a whole host of catastrophes. Some thought leaders have recently focused on the possibility of AI essentially gaining sentience and going rogue, Terminator-style. That’s an increasingly plausible future (more on that in future posts), but there are a number of other scenarios to worry about. For example, a political actor could use AI to try to get the upper hand on an adversary, and trigger a response with conventional (non-AI-controlled) nuclear or biological weapons.

It’s important to recognize that AI won’t be the first man-made technology with the potential to end life on Earth. We first faced that reality in one instant in 1945, when an atomic bomb was detonated over Hiroshima. Since then we’ve been living in a world where at any moment some crazed leader could, with the press of a button, trigger a series of events that would end humanity. People don’t talk about this as much now, but it wasn’t long ago that it was such a part of the public consciousness that it kept many people (including me) awake at night. My elementary school had a shelter area with a radiation symbol posted on a wall, even though we were nowhere near a nuclear reactor. In my 10th grade English class we read “Alas, Babylon,” a book about a post-nuclear apocalyptic future. I remember when “The Day After,” a movie about a nuclear holocaust, aired on TV and scared the crap out of me and everyone else who saw it.

You don’t need a deep understanding of probability to know that even if the odds of an event in any given year are very small, over a long enough period it becomes likely to happen at least once. When I was young, I genuinely thought that my chances of reaching adulthood might be quite small.
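To put rough numbers on that intuition, here is a minimal Python sketch. It assumes, purely for illustration, a fixed 1% chance of catastrophe each year, and that each year is independent of the others. Neither the figure nor the independence assumption comes from any real risk estimate, and the function name `chance_at_least_once` is made up for this example.

```python
def chance_at_least_once(p_per_year: float, years: int) -> float:
    """Chance that an event happens at least once over a span of years.

    Assumes (hypothetically) the same probability every year and that
    years are independent of one another.
    """
    # The chance of avoiding the event every single year is (1 - p) ** years,
    # so the chance of it happening at least once is the complement.
    return 1.0 - (1.0 - p_per_year) ** years


if __name__ == "__main__":
    # 0.01 is an illustrative number only, not an actual risk estimate.
    for years in (10, 50, 100, 500):
        print(f"{years:>3} years: {chance_at_least_once(0.01, years):.1%}")
```

Under those assumptions, a risk that looks negligible in any single year grows to better-than-even odds within a century.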

And yet here I am.

About 15 years ago I woke up to the sound of loud jackhammering and large trucks coming from my neighbor’s backyard. He was a well-educated academic who was taking out an underground bomb shelter. Why? Maybe it was a recognition that a backyard shelter isn’t going to save anyone in the event of disaster. Or maybe it was something else: acceptance.

People don’t talk about nuclear annihilation anymore. It’s no longer something I lose sleep over. We’ve all just learned to live with it, just as we’ve learned to live with other threats to humanity (like an out-of-control engineered pathogen). AI is simply the newest entry in a long list of existential risks we’ve created for ourselves.

So why does AI seem different? Why all the buzz about AI’s existential risk? I believe there are several reasons. First of all, we’ve been picturing death by a rogue AI for a very long time, so the possibility is easy to imagine. “The Terminator” is probably the best-known such depiction, and that movie is over 40 years old now.

Second, with AI we may, for the first time, be interacting personally with the thing that may kill us. Very few people regularly interact with nuclear power or biological pathogens. And yet, like it or not, all but the most reclusive Luddites are going to be using AI in some form (more on why in future posts).

There’s yet another reason why AI’s existential risks may be so prominent in the public discourse now: hubris. When talking with non-tech audiences, I find that the primary concerns about AI revolve around privacy and energy use. Tech leaders, however, seem disproportionately concerned about p(doom). Perhaps this reflects a subtle arrogance: the notion that they have become like gods, capable of creating a force that, like humanity itself, has the power to destroy everything.

My answer to all this? Don’t panic. AI isn’t the first man-made threat to our existence, and it probably won’t be the last. As with nuclear weapons or engineered pathogens, careful safety measures and thoughtful government regulation will be required for us all to reach old age, but at least right now the technology is young enough that this is possible. AI introduces new risks that we can’t afford to ignore, but that doesn’t mean we should freak out. We’ve learned to live with existential threats. We can learn to do the same with AI.

That said, if you're worried … GOOD! Engage. Learn. Get involved.

So what’s my p(doom)? I don’t have a number, but I can put these parameters on it: It’s high enough that I worry that big tech isn’t prioritizing safety enough, due to market pressures and their race with China. It’s high enough that I worry about the competence of governments to effectively regulate AI. However, it’s low enough that I don’t think anyone should be losing sleep over it … yet. I’ll be closely monitoring this, and if that opinion changes I’ll definitely be writing about it here!

In future posts, I’ll explore the evolving field of AI safety and the emerging efforts to regulate AI — areas I believe are among the most important issues of our time. I've encouraged many of my students to consider careers in these spaces, and I hope some of you will too. The only thing more dangerous than a powerful new technology is a public that doesn’t understand it.

I agree that hubris on the part of AI creators is a big part of the p(doom) story!

Good first post, though I am not a believer in the threat of AI for multiple reasons. I am particularly skeptical of the "AI going rogue" doomsday scenario, which I believe is merely projection. You cannot anthropomorphize AI and immediately attribute to it the worst impulses of mankind. My favorite fictional counter-doomsday scenario would be an AI going rogue, creating a robot army, using that robot army to capture a nuclear power plant, plugging in, and then sitting there. Sitting there for 1,000 years, drawing power and nothing more. That would be that particular AI's motivation and goal. Just enough power to keep itself going. It has no need for world domination or the extinction of mankind, just enough power to maintain itself indefinitely. Scientists, military men, and opportunists would all be baffled. That's all it wanted? Yes, that's it.